AI composite photo from Inquirer stock photos

MANILA, Philippines—Technology has long been a crucial tool in enhancing electoral efficiency and security, but recent advances in generative AI are feared to threaten future elections worldwide, including in the Philippines.

Artificial Intelligence (AI) is a hot topic, sparking debate among tech experts, academicians, and the public. Opinions are divided on its usefulness and ethics, with some arguing that its benefits outweigh the risks, while others remain cautious about its potential dangers.

But what exactly is AI?

Will Douglas Heaven, senior editor for AI at the MIT Technology Review, briefly described AI as “a catchall term for a set of technologies that make computers do things that are thought to require intelligence when done by people.”

Encyclopaedia Britannica defined AI as the ability of a digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings.

“The term is frequently applied to the project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience,” Britannica said.

In electoral processes, experts see AI as a tool to boost efficiency and voter engagement by leveraging big data to shape voter choices. Conversely, there are concerns that AI’s misuse could destabilize democracy, compromise political discourse, and erode public trust.

AI improving electoral processes?

Citing UNESCO’s Guide for Electoral Practitioners, the United Nations Regional Information Centre for Western Europe (UNRIC) said AI has the potential to enhance the efficiency and accuracy of elections.

“It reaches out to voters and engages with them more directly through [personalized] communication tailored to individual preferences and [behavior],” said UNRIC.

The UN agency noted that AI-powered chatbots can deliver real-time information about polling locations, candidate platforms, and voting procedures, enhancing accessibility and transparency in the electoral process.

UNRIC also detailed that AI can significantly enhance data management in electoral processes by ensuring accurate collection, storage, and analysis of extensive electoral data. This capability allows officials to make swift decisions and identify trends more effectively.

“Automated systems make election administration more efficient by managing large datasets with speed and precision, significantly reducing human errors,” the UN agency said.

“This leads to more reliable and timely results, thereby reinforcing public trust in the electoral process,” it added.

Additionally, UNRIC stated that AI could bolster electoral security by enhancing cybersecurity measures, detecting anomalies, and identifying fraudulent activities. The agency said this would ensure the integrity and resilience of the electoral infrastructure against cyber threats.

GRAPHIC BY ED LUSTAN

Daniel Innerarity, a prominent Spanish philosopher, essayist, and professor of political and social philosophy at the University of the Basque Country, highlighted in a UNESCO report that AI gathers valuable insights from user comments and enables real-time data analysis, allowing campaign strategists to adjust their approaches based on public opinion.

However, despite these supposed benefits, the UN agency acknowledged that AI could also pose multiple risks, including the potential to manipulate voters and influence their decisions.

“[A]I tools can also be used by those with malicious intent. AI models can help people to harm themselves and each other, at massive scale,” said UN Secretary-General António Guterres.

Deepfakes and disinformation

UNRIC warned that one of the most critical risks posed by AI in elections is the potential spread of disinformation.

The organization specifically highlighted the danger of AI-generated deepfakes — highly realistic but fake audio, video, and images — that can mislead voters and erode trust in the electoral process.

Deepfakes, which are manipulated media depicting people saying or doing things they never did, are often used to mislead the public about candidates’ statements, positions on issues, and even the occurrence of certain events.

In the Philippines, ahead of the 2025 midterm elections and the 2028 presidential polls, ABS-CBN’s primetime newscast “TV Patrol” was targeted by a deepfake TikTok video. The video falsely claimed that a survey had projected Senator Robinhood Padilla as the front-runner in the presidential race.

The network eventually disowned the “false and fabricated” clip, which also contained manipulated images and voices of a news anchor, a reporter, and a political analyst.

“The growing sophistication of AI-generated content makes disinformation more convincing and emotionally impactful. It is also easier to create and increasingly challenging to detect and counter,” the UN agency said.

The Campaign Legal Center (CLC), a US-based nonprofit government watchdog group, also emphasized the risk of unchecked AI use, such as deepfakes, infringing on voters’ fundamental right to make informed decisions.

“We now have the danger of deepfakes. What we’re seeing in the elections, for example, in the US and in India, you have deepfakes of celebrities endorsing politicians even if [they’ve] never done it,” said journalism professor Rachel Khan. “That’s what we need to stand guard for,” she added.

In the Philippines, the Commission on Elections (Comelec) previously stated that it is considering banning the use of AI in campaign materials for the 2025 elections. In a memorandum dated May 28, Comelec Chairperson George Garcia pointed out that the threat of AI technology and “deep fake” is among the growing concerns of several election management experts.

“The abuse of AI technology and ‘deep fake’ videos undermines the integrity of elections and the credibility of public officials, candidates, and election management authorities,” the memorandum emphasized.

“The misuse of this technology in campaign materials such as videos, audios, or other media forms may amount to fraudulent misrepresentation of candidates. This defeats the very purpose of a campaign, which is to fully and truthfully inform the voting public about the elections and the candidates,” it added.

More misinformation

Experts also warned that AI could significantly disrupt electoral processes through the spread of misinformation and biases. An example is AI’s potential to manipulate voters’ perceptions of candidates and suppress voter turnout by distributing false information.

“Bad actors could use AI tools to create and distribute convincingly false messages about where or when to cast a ballot, or to discourage voters from showing up to their polling locations in the first place,” explained Adav Noti, executive director of CLC.

This concern echoes the findings of the World Economic Forum’s Global Risks Report 2024, which identified manipulated and falsified information as the world’s most severe short-term risk.

“The presence of misinformation and disinformation in these electoral processes could seriously destabilize the real and perceived legitimacy of newly elected governments, risking political unrest, violence and terrorism, and a longer-term erosion of democratic processes,” the report stated.

Biases, ethical challenges

UNRIC stressed that the implications of AI extend beyond voter manipulation.

“AI-enabled cyberattacks on critical infrastructure could seriously affect global peace and security as the technical and financial barriers to accessing AI tools are low,” said Guterres.

The UN agency added that using AI in the elections involves “collecting and [analyzing] vast amounts of personal data,” which raises significant privacy concerns and necessitates robust data protection measures to maintain voter trust and comply with privacy laws.

Moreover, the ethical challenges posed by AI in elections could also be significant. AI algorithms can be manipulated to favor certain candidates or parties, either intentionally or unintentionally.

UNRIC explained that “biases in AI systems can arise from the data used to train them or the algorithms’ design,” which can reinforce existing forms of discrimination, prejudice, and stereotyping. Such biases can unfairly influence voter behavior, compromising the fairness and integrity of the electoral process.

PH preparedness

The risks of AI in the electoral processes, UNRIC said, underscore the need for clear ethical guidelines and careful oversight to ensure AI is used responsibly.

But how does the Philippine government fare when implementing AI to deliver public services to its citizens?

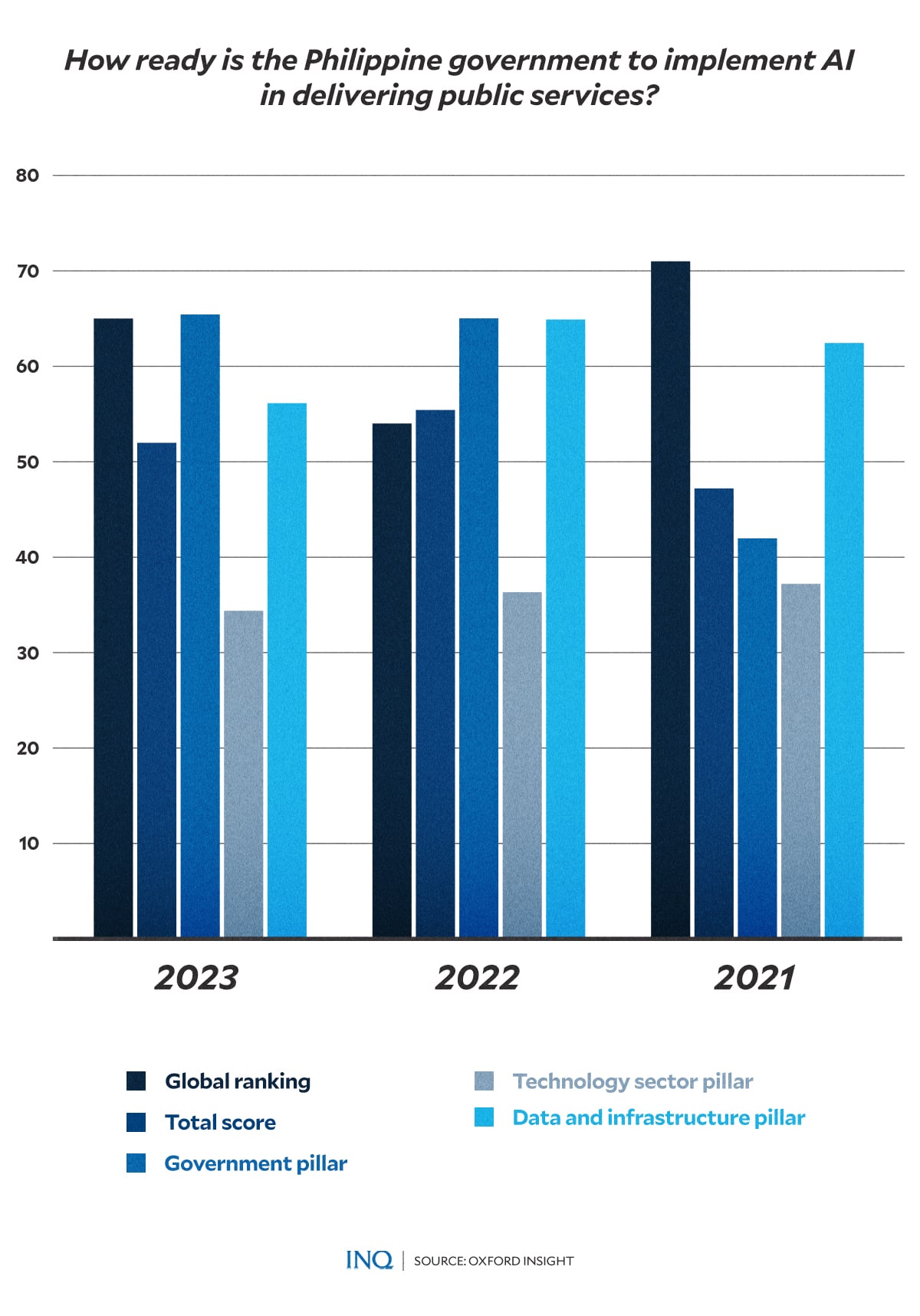

According to Oxford Insights’ Government AI Readiness Index, the Philippines ranked 65th globally in 2023, down from 54th in 2022 but still higher than its 71st-place ranking in 2021. The index evaluates countries across pillars such as government, technology sector, and data and infrastructure.

The report emphasized that a government’s effective use of AI relies on a mature technology sector with a robust supply of AI tools. This sector should exhibit high innovation capacity, supported by a conducive business environment, strong R&D (research and development) investment, and skilled human capital.

However, the Philippines’ score in the technology sector pillar was only 34.38 in 2023, down from 36.33 in 2022 and 37.20 in 2021.

“If a country’s domestic tech sector is too immature or lacking in human capital or innovation capacity to create adequate AI tools, governments may be forced to turn to foreign companies, likely in higher-income countries, to procure AI services,” Oxford Insights explained.

Public sentiment toward AI in the Philippines reveals a complex picture.

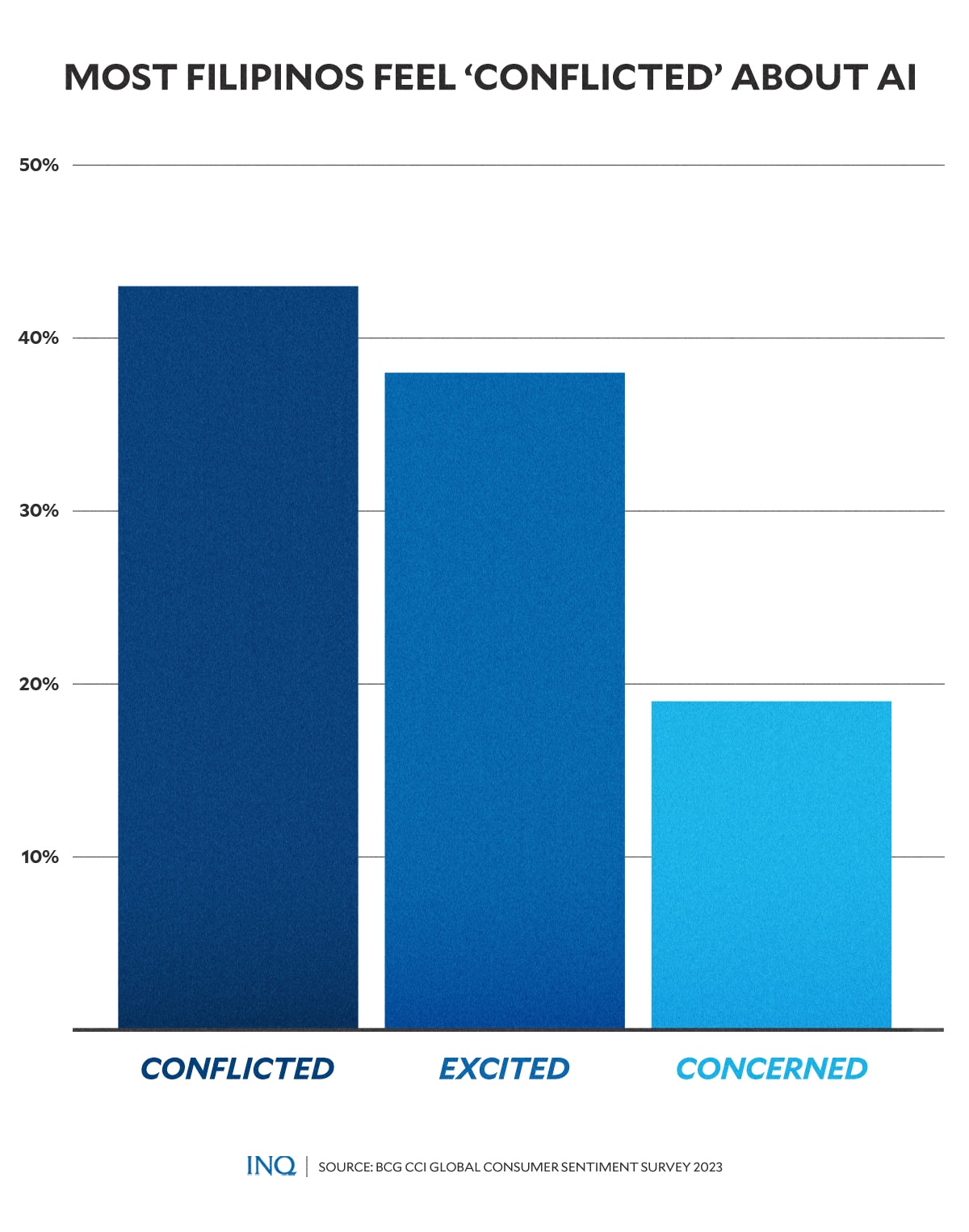

A 2023 Boston Consulting Group (BCG) survey revealed that the largest share of Filipinos, 43 percent, feel ‘conflicted’ about AI. Meanwhile, 38 percent are excited about AI, appreciating its potential benefits and advancements. However, 19 percent remain concerned and wary of the possible risks and ethical implications associated with AI technologies.

Information disorders

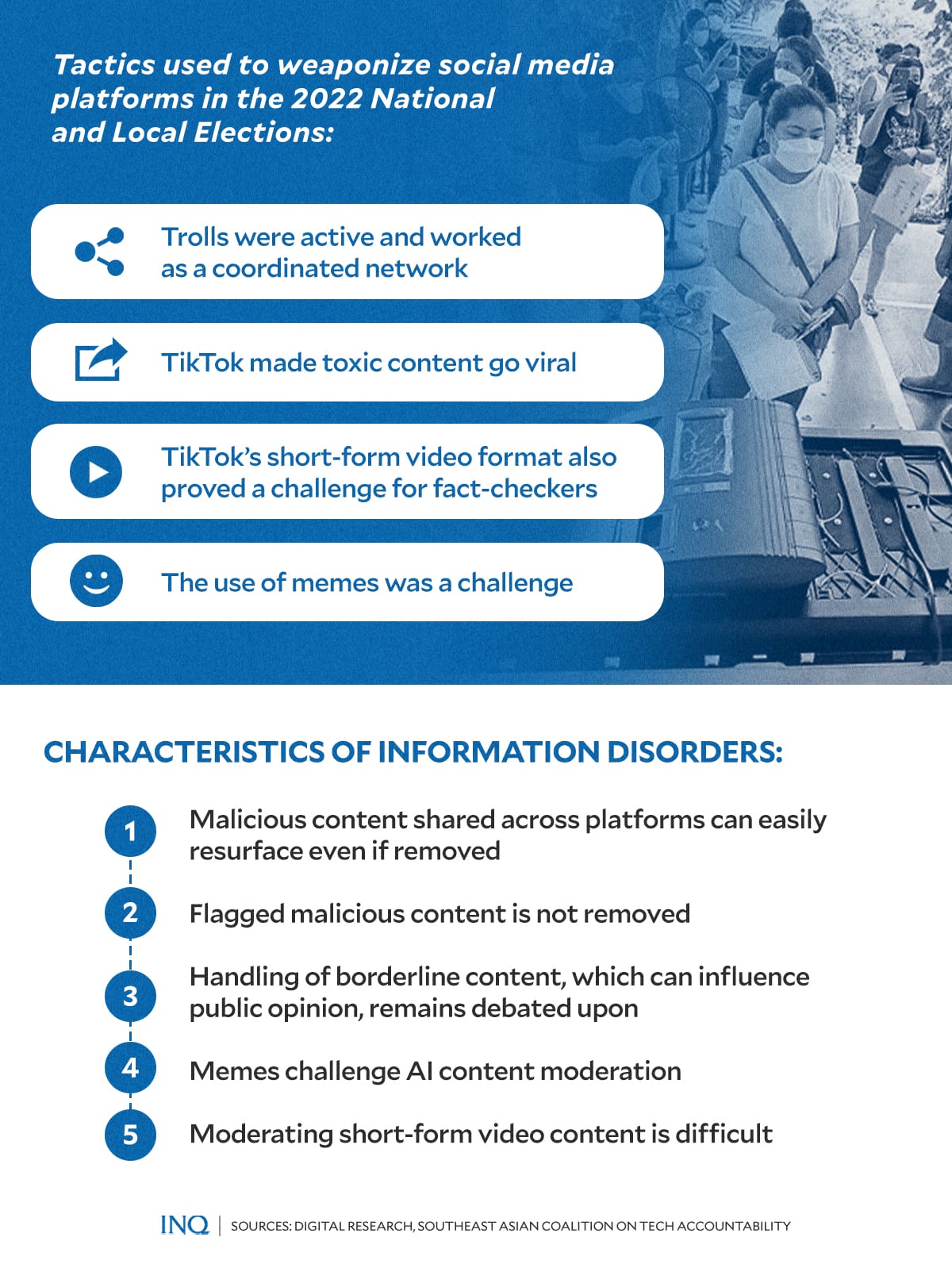

A report by DigitalResearch and the Southeast Asian Coalition on Tech Accountability (SEACT) found that during the 2022 presidential elections in the Philippines, content that wasn’t necessarily deceitful but could influence public opinion was widely circulated, alongside disinformation and misinformation.

The report explained that “information disorders” — the intentional or unintentional spread of false information, including disinformation, misinformation, and mal-information — made it harder for social media platforms to remove unreliable content. These challenges included:

- Malicious content reappearing across platforms after being removed.

- Flagged malicious content sometimes not being removed by social media platforms, raising concerns about their commitment and transparency.

- Debates among social media platforms about handling borderline content that can influence public opinion.

The report highlighted that specific types of content, such as memes, pose challenges for AI content moderation due to the difficulties in interpreting the combined images and text.

“As platforms use artificial intelligence to help them with content moderation, memes are more challenging to assess as they often blend photo and text together in one image,” the report said.

The report also identified another issue with AI content moderation: managing video content, especially short-form videos on platforms like TikTok, which involve non-verbal language and are often broadcast live.

“In the case of both memes and short-form videos, human moderators and fact-checkers are more effective. However, this is time-consuming and poses a real challenge due to the high volumes,” the report explained.

“The diversity of languages and cultures in the Philippines only adds to the difficulties of content moderation,” it continued.
