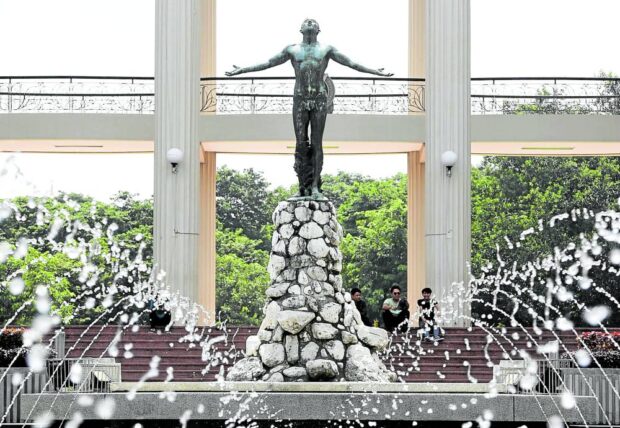

FIRST STEPS The University of the Philippines is taking the first steps in formulating guidelines and policies on artificial intelligence, drafting 10 “principles” on its use and development amid its expansion into many facets of the daily life of the public. —INQUIRER FILE PHOTO

The University of the Philippines (UP) has released a draft set of guiding principles on the “responsible use” of artificial intelligence (AI), in the first such move by the national university to deal with the expansion of various applications like the popular ChatGPT.

On Wednesday, UP said that it was “considering a policy on AI” in response to the rapid development and adoption of the technology, especially in an academic environment.

It released a draft text, the “UP Principles for Responsible Artificial Intelligence,” covering the use and development of AI in the university and for the public good. It asked students, teachers and other members of the UP community to suggest improvements to the draft.

Other universities in the country have not made public similar efforts, if any, in the use or deployment of AI by their constituents.

“AI makes our lives easier by automating tasks and providing us with information and recommendations tailored to our individual needs. It also has the potential to transform education. It could enhance the personalized learning, increase student engagement in learning, and improve education management,” UP said in a statement.

Potential problems

It acknowledged, however, that the rise of AI includes some risks and potential problems.

In the academic setting, challenges from AI “range from systemic bias, inequality for marginalized groups of students, privacy and bias in data collection and processing.”

“Already, many are worried that ChatGPT opens the door to cheating and plagiarism,” the statement said. “It is therefore imperative for the national university, to promote the positive use and mitigate the negatives of AI.”

The effort comes months after UP faculty members urged the university to review its academic integrity policies to cover the use—or misuse—of AI for students in completing academic requirements.

This was prompted by a history professor’s disclosure that he suspected one of his students of using ChatGPT, a language model-based application that can answer “prompts” and generate useful texts based on information taken from the internet, to write a final exam essay.

A matter of time

Eduardo Tadem, UP Asian Studies professor emeritus, said these guidelines—even in the draft stage—were a “welcome development as UP really needs a policy not just for students but also for faculty members who may use AI for their research.”

“It’s just a matter of time, especially since UP is such a huge university,” he said. “There is much more urgency to come up with something.”

Tadem, a longtime scholar, has himself used the OpenAI tool ChatGPT to aid in his research for a monograph discussing inequality, tax justice and the Philippine wealth tax campaign, which was published on June 30 by the UP Center for Integrative and Development Studies.

From his experience, he said any policy tackling AI, especially in fulfilling academic requirements, should have “the same standards for citation.”

“If students use AI to look up information, they should double-check for accuracy and cite the right reference material,” Tadem said.

“I personally advocate for the use of AI, as long as it’s used as a tool,” he said. “What it really boils down to is whether anybody wanting to use AI has discernment to sift through what is credible and not.”

No to blanket ban

In January this year, the UP Diliman AI program faculty noted that AI could generate much more than just essays.

AI can also be used for graphic design (like the Stability AI and Midjourney websites), content generation (Jasper AI), automated computer programming (Copilot and Ghostwriter), voice mimicry (VALL-E) and synthetic human videos (Synthesia AI), the group said.

At the same time, it cautioned against a blanket ban on AI in university work, citing how top-ranking universities in other countries were using the technology to innovate and improve academic work.

AI is already being used in many applications outside a university setting, often quietly, with people either not realizing it or just taking it for granted.

It is used in language translators and voice assistants in cellphones, as virtual health aides for patient care and monitoring, or in checking blood sugar levels for diabetics, and in satellite data analysis of changes in the climate and environment.

For now, the principles outlined by UP do not include specific policies on the do’s and don’ts in the use of AI. However, they lay down the basic points on what constitutes responsible AI use, development and promotion.

Among others, it said that university faculty, researchers, staff and students developing, deploying or operating AI systems “should be held accountable for their proper functioning.” It did not elaborate on the extent of that accountability.

AI systems should also be “transparent so that people can understand how these systems work and make decisions.”

“Individuals should be informed when AI-enabled tools are being used. The methods should be explainable, to the extent possible, and individuals should be able to understand AI-based outcomes, ways to challenge them, and meaningful remedies to address any harms caused,” it added.

It also calls on AI researchers and developers to establish and monitor targets in AI development to ensure inclusion, diversity and equality; and to collaborate with the university, private sector and the government to promote transparency.

Any use of AI systems, it said, should be assessed for bias and discrimination to prevent further harm and must also “function in a secure and safe way.”

Most of all, the university said, AI “should benefit the Filipino people by fostering inclusive economic growth, sustainable development, political empowerment and enhanced well-being, among others.”

“It is vital to harness inter-sectoral, interdisciplinary and multi-stakeholder expertise to inform decision making on AI. Privacy is a value to uphold and as a right to be protected,” it added.
